I just wrote an article for Mobile Orchard on how to get started with OpenGL ES 2.0 on the iPhone 3GS. It goes over all the steps necessary to set up a barebones project that renders a quad on screen using a vertex and a fragment shader. It’s not the most stunning thing ever visually, but I think it makes for a good starting point for OpenGL ES 2.0 projects.

I just wrote an article for Mobile Orchard on how to get started with OpenGL ES 2.0 on the iPhone 3GS. It goes over all the steps necessary to set up a barebones project that renders a quad on screen using a vertex and a fragment shader. It’s not the most stunning thing ever visually, but I think it makes for a good starting point for OpenGL ES 2.0 projects.

Graphics

There are 11 posts filed in Graphics (this is page 2 of 3).

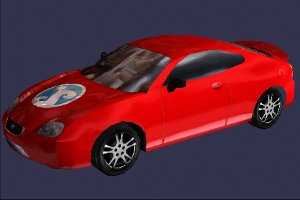

Environment Mapping Demo With OpenGL ES 1.1

I just finished creating a graphics demo for a chapter I’m writing for the book iPhone Advanced Projects edited by Dave Mark. In the chapter I go over a few different lighting techniques and go in detail on how to do masked environment mapping on an iPhone 3G with OpenGL ES 1.1.

I just finished creating a graphics demo for a chapter I’m writing for the book iPhone Advanced Projects edited by Dave Mark. In the chapter I go over a few different lighting techniques and go in detail on how to do masked environment mapping on an iPhone 3G with OpenGL ES 1.1.

The demo ended up looking pretty good, so I decided to upload a quick video showing the different lighting modes:

- Diffuse texture only

- Diffuse texture plus ambient and diffuse lighting

- Diffuse texture plus ambient, diffuse, and specular lighting (as usually, per-vertex lighting looks pretty bad, even though this is a relatively high-poly model)

- Fully reflective environment map (using the normal environment map technique)

- Environment map added to the diffuse texture and lighting

- Environment map with a reflection mask plus diffuse texture and lighting (two passes on an iPhone 3G–or one pass if you’re not using the diffuse alpha channel)

The chapter in the book will go in detail into each of those techniques, building up to the last one. Full source code will be included as well.

Some of these techniques will also be covered in my upcoming Two Day iPhone OpenGL Class organized in collaboration with Mobile Orchard.

Teaching a Two-Day OpenGL iPhone Class. Register Now!

I’m excited to announce the intensive, two-day class on OpenGL for the iPhone that I’ll be teaching. The class will be held September 26th-27th, in Denver, right before the 360iDev conference, and it’s part of the Mobile Orchard Workshops.

I’m excited to announce the intensive, two-day class on OpenGL for the iPhone that I’ll be teaching. The class will be held September 26th-27th, in Denver, right before the 360iDev conference, and it’s part of the Mobile Orchard Workshops.

The class is aimed at iPhone developers without previous OpenGL experience. It’s going to be very hands-on, and you’ll create both 2D and 3D applications during the weekend. You’ll learn all the basics: cameras, transforms, and how to draw meshes, but we’ll also cover some more advanced topics such as lighting, multitexturing, point sprites, and even render targets. Most importantly, you’ll walk away with a solid understanding of the basis, which will allow you to continue learning OpenGL and advanced computer graphics on your own from the docs, samples, or even browsing the API directly.

The main requirement for the class is that you’re familiar with the iPhone development environment and that you have basic knowledge of the C language. Beyond that, to the the most out of the course, you should be familiar with the basics of linear algebra (vector, matrices, and dot products). Anything else, we’ll cover it all during the class.

Registration is now open, and you can get some great discounts by registering early and attending the 360iDev conference. For more details, check the official announcement page.

Hope to see some of you there!

A Huge Leap Forward: Graphics on The iPhone 3G S

I just wrote an article over at Mobile Orchard about the new graphics capabilities on the new iPhone 3G S. Check it out to find out the details, and what those new features might mean for us developers.

Remixing OpenGL and UIKit

Yesterday I wrote about OpenGL views, and how they can be integrated into a complex UI with multiple view controllers. There’s another interesting aspect of integrating OpenGL and UIKit, and that’s moving images back and forth between the two systems. In both cases, the key enabler is the CGContext class.

From UIKit to OpenGL

This is definitely the most common way of sharing image data. Loading an image from disk in almost any format is extremely easy with Cocoa Touch. In fact, it couldn’t get any easier:

UIImage* image = [UIImage imageNamed:filename];

After being used to image libraries like DevIL, it’s quite a relief to be able to load a file with a single line of code and no chance of screwing anything up. So it makes a lot of sense to use this as a starting point for loading OpenGL textures. So far we have a UIImage. How do we get from there to a texture?

All we have to do is create a CGContext with the appropriate parameters, and draw the image onto it:

byte* textureData = (byte *)malloc(m_width * m_height * 4); CGContext* textureContext = CGBitmapContextCreate(textureData, m_width, m_height, 8, m_width * 4, Â Â Â Â Â Â Â Â Â Â Â CGImageGetColorSpace(image), kCGImageAlphaPremultipliedLast); CGContextDrawImage(textureContext, CGRectMake(0.0, 0.0, (CGFloat)m_width, (CGFloat)m_height), image); CGContextRelease(textureContext);

At that point, we’ll have the image information, fully uncompressed in RGBA format in textureData. Creating an OpenGL texture from that is done like we always do:

glGenTextures(1, &m_handle); glBindTexture(GL_TEXTURE_2D, m_handle); glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, m_width, m_height, 0, GL_RGBA, GL_UNSIGNED_BYTE, textureData);

And we’re done.

A word of advice: This is a quick way to load textures and get started, but it’s not the ideal way I would recommend for a shipping product. This code is doing a lot of work behind the scenes: loading the file, decompressing the image into an RGBA array, allocating memory, copying it over to OpenGL, and, if you set the option, generating mipmaps. All of this at load time. Ouch! If you have more than a handful of textures, that’s going to be a pretty noticeable delay while loading.

Instead, it would be much more efficient to perform all the work offline, and prepare the image into the final format that you want to use with OpenGL. Then you can load that data directly into memory and call glTextImage2D on it directly. Not as good as having direct access to video memory and loading it there directly, but that’s as good as it’s going to get on the iPhone. Fortunately Apple provides a command-line tool called texturetool that does exactly that, including generating mipmaps.

Another use of transferring image data from UIKit to OpenGL beyond loading textures is to use the beautiful and full-featured font rendering in UIKit in OpenGL applications. To do that, we render a string into the CGContext:

CGColorSpaceRef   colorSpace = CGColorSpaceCreateDeviceGray(); int sizeInBytes = height*width; void* data = malloc(sizeInBytes); memset(data, 0, sizeInBytes); CGContextRef context = CGBitmapContextCreate(data, width, height, 8, width, colorSpace, kCGImageAlphaNone); CGColorSpaceRelease(colorSpace); CGContextSetGrayFillColor(context, grayColor, 1.0); CGContextTranslateCTM(context, 0.0, height); CGContextScaleCTM(context, 1.0, -1.0); UIGraphicsPushContext(context);    [txt drawInRect:CGRectMake(destRect.left, destRect.bottom, destRect.Width(), destRect.Height()) withFont:font                         lineBreakMode:UILineBreakModeWordWrap alignment:UITextAlignmentLeft]; UIGraphicsPopContext();

There are a couple things that are a bit different about this. First of all, notice that we’re using a gray color space. That’s because we’re rendering the text into a grayscale, alpha-only texture. Otherwise, a full RGBA texture would be a waste. Then there’s all the transform stuff thrown in the middle. That’s because the coordinate system for OpenGL is flipped with respect to UIKit, so we need to inverse the y.

Finally, we can create a new texture from that data, or update an existing texture:

glBindTexture(GL_TEXTURE_2D, m_handle); glTexSubImage2D(GL_TEXTURE_2D, 0, 0,0, m_width, m_height, GL_ALPHA, GL_UNSIGNED_BYTE, data);

From OpenGL to UIKit

This is definitely the more uncommon way of transferring image data. You would use this when saving something rendered with OpenGL back to disk (like game screenshots), or even when using OpenGL-rendered images on UIKit user interface elements, like I’m doing in my project.

The process is very similar to the one we just went over, but backwards, with a CGContext as the middleman.

We first start by rendering whatever image we want. You can do this from the back buffer, or from a different render target. I don’t know that it makes a difference in performance either way (these are not fast operations, so don’t try to do them every frame!). Then you capture the pixels from the image you just rendered into a plain array:

unsigned char buffer[width*(height+30)*4]; glReadPixels(0,0,width,height,GL_RGBA,GL_UNSIGNED_BYTE, &buffer);

By the way, notice the total awesomeness of the array declaration with non-constant variables. Thank you C99 (and gcc). Of course, that might not be the best thing to do with large images, since you might blow the stack size, but that’s another issue.

Anyway, once you have those pixels, you need to go through the slightly convoluted CGContext again to put it in a format that can be consumed directly by UIImage like this:

CGImageRef iref = CGImageCreate(width,height,8,32,width*4,CGColorSpaceCreateDeviceRGB(), Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â kCGBitmapByteOrderDefault,ref,NULL, true, kCGRenderingIntentDefault); uint32_t* pixels = (uint32_t *)malloc(imageSize); CGContextRef context = CGBitmapContextCreate(pixels, width, height, 8, width*4, CGImageGetColorSpace(iref), Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â kCGImageAlphaNoneSkipFirst | kCGBitmapByteOrder32Big); CGContextTranslateCTM(context, 0.0, height); CGContextScaleCTM(context, 1.0, -1.0); CGContextDrawImage(context, CGRectMake(0.0, 0.0, width, height), iref);Â Â Â CGImageRef outputRef = CGBitmapContextCreateImage(context); UIImage* image = [[UIImage alloc] initWithCGImage:outputRef]; free(pixels);

Again, we do the same flip of the y axis to avoid having inverted images.

Here the trickiest part was getting the color spaces correct. Apparently, even though it looks very flexible, the actual combinations supported in CGBitmapContextCreate are pretty limited. I kept getting errors because I kept passing an alpha channel combination that it didn’t like.

At this point, you’ll have the OpenGL image loaded an a UIImage, and you can do anything you want with it: Slap it on a button, save it to disk, or anything that strikes your fancy.