I haven’t started doing any real performance optimizations on my iPhone app yet. I pretty much ported it from the original PC version, saw that it was running rather slow (2-3 fps) so I changed the number of elements on the screen until it was an “acceptable” 15 fps and left it there until I had time to come back and optimize things towards the end.

I even ran it through some of the iPhone performance gathering tools and saw that it was spending half the time in the simulation and half the time rendering. A bit surprising, but it’s not like I had much to compare it against since this was my first performance-intensive iPhone app.

That was until this morning. Prompted by some of the comments in my previous post, I did a quick search about the iPhone floating-point performance. I had heard conflicting information on it: Does it have hardware floating-point support? Do you have to use fixed-point numbers for best performance? Apple is very tight-lipped about the specific hardware specs of the iPhone, which seems very strange to those of us coming from a console background. But people have been able to determine that the CPU is a 32-bit RISC ARM1176JZF. The good news is that it has a full floating-point unit, so we can continue writing math and physics code the way we do on most platforms.

The ARM CPU also has an architecture extension called Thumb. It seems to be a special set of 16-bit instruction encodings that claim to improve performance. I imagine the performance increase comes from a smaller code footprint and faster code fetching and processing. As a bonus, you also get a smaller memory footprint, so it seems like a win for a lot of mobile platforms. Xcode comes with the option to generate Thumb code turned on by default.
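Since the option is on by default, it can be handy to check from inside the code which instruction set a file is actually being built for. Here is a minimal sketch, assuming a GCC or Clang toolchain, which predefines `__thumb__` when compiling to Thumb and `__arm__` for 32-bit ARM targets. The function names are made up for illustration.

```c
/* Returns 1 if this file was compiled to Thumb instructions, 0 otherwise.
   Relies on the __thumb__ macro that GCC and Clang predefine for Thumb builds. */
int compiled_as_thumb(void)
{
#if defined(__thumb__)
    return 1;
#else
    return 0;
#endif
}

/* Human-readable name for the instruction set this file was compiled to. */
const char *instruction_set_name(void)
{
#if defined(__thumb__)
    return "Thumb";
#elif defined(__arm__)
    return "ARM (32-bit instructions)";
#else
    return "not a 32-bit ARM target";
#endif
}
```

Logging `instruction_set_name()` once at startup is a cheap way to confirm that a build setting change really took effect on the device build.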

But, and this is a huge but, it seems that floating-point operations cause the program to switch back and forth between Thumb mode and regular 32-bit mode. It would be interesting to look at the assembly generated, but I haven’t had time to do that yet. So the more floating-point calculations you do, the less of a performance gain you’ll get from Thumb optimizations. Or, in the extreme, you might even get a performance degradation.

Most 3D games are very floating-point intensive, and my app is no different, so I decided to turn off Thumb code generation on a whim. The results:

- Thumb code generation ON (default): 15 fps

- Thumb code generation OFF: 39 fps

Whoa!!! That’s a saving of 41 ms per frame! That has to be the optimization with the most bang for the time spent on it that I’ve ever done. This also probably means that my app is now totally render-bound, which is good news. I’m sure I can optimize tons of stuff there.
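For anyone wondering where the 41 ms comes from, it is just the difference in time per frame at the two frame rates: 1000/15 ≈ 66.7 ms versus 1000/39 ≈ 25.6 ms. A small sketch of that arithmetic, with helper names that are purely illustrative:

```c
/* Time spent on a single frame, in milliseconds, at the given frame rate. */
double frame_time_ms(double fps)
{
    return 1000.0 / fps;
}

/* Milliseconds saved per frame when the frame rate goes from
   fps_before to fps_after. */
double frame_time_saved_ms(double fps_before, double fps_after)
{
    return frame_time_ms(fps_before) - frame_time_ms(fps_after);
}
```

Calling `frame_time_saved_ms(15.0, 39.0)` gives roughly 41 ms. Thinking in milliseconds per frame rather than fps makes it easier to compare optimizations, since frame times add up linearly and frame rates don't.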

So if you’re doing any kind of a 3D game, turn off Thumb code generation. Now!

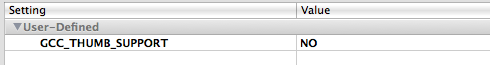

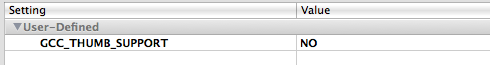

Edit: I realized I never explained how to turn the Thumb code generation off! Oops. Go to your project settings, add a new User-Defined setting called: GCC_THUMB_SUPPORT and set it to NO. That simple (but surprisingly there wasn’t an already existing setting to check it on and off).
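If you keep your build settings in an `.xcconfig` file instead of editing them in the project settings, the same setting would look something like the fragment below. This is a sketch that assumes your target already uses a configuration file; the comment line is mine.

```
// Turn off Thumb code generation for floating-point-heavy code
GCC_THUMB_SUPPORT = NO
```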

A couple of weeks ago, I decided to take a few days off from my project to enjoy the holidays with friends and family. Of course, I couldn’t stop thinking about the project. Soon my thoughts turned to how much more I had to go, and how some parts of the development process were still unknown to me, like the Apple submission process, or making a build for distribution on the App Store.