Lag in games is as inevitable as taxes. It’s something we can try to minimize, but we always need to live with it. Earlier this week, I noticed that input for my new iPad game was very laggy. Excessively so, to the point it was really detracting from the game, so I decided I had to look into it a bit more.

Lag In Games

I’m defining lag as the time elapsed between the moment the player performs an input action (pressing a button, touching the screen, moving a finger) and the moment the game provides some feedback for that input (movement, a flash behind a button, a sound effect).

Mick West wrote a great article on the causes of lag in games, followed up by another one on how to measure it. I’m going to apply some of that to the lag I was experiencing in my game.

Lag can be introduced in games by many different factors:

- Delay between gathering input and delivering it to the game.

- Delay rendering simulation state.

- Delay displaying the latest rendered state on screen.

The new game runs on the iPad and involves moving objects around the screen with your finger. To make sure it wasn’t anything weird with the rest of the game code, I wrote a quick (and ugly!) program that draws a square with OpenGL that follows your finger on the screen. When you run the sample, the same lag becomes immediately obvious.

The iPad is a much larger device than the iPhone, and it encourages a physical metaphor even more. As soon as you attempt to move an “object” on screen, the lag kills that sense of physicality. Instead of moving an object around with your finger, you’re dragging it around with a rubber band. It moves the player from applying direct action on screen to being removed and dissociated from the actions on screen.

Loop Structure

The place to start looking for lag is in my main loop. The main loop looks something like this:

    ProcessInput();
    UpdateSimulation();
    RenderWorld();
    PresentRenderBuffer();

So I was reading the input correctly before the simulation. Nothing weird there.

Touch input is delivered to the code as events from the OS. Whenever I receive those events (outside of the main loop), I queue them, and then process them all at the start of the next main loop iteration in ProcessInput().
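To make that ordering concrete, here is a minimal sketch of the queue-then-drain pattern in Python. It is not the game’s code: every name in it (`pending_touches`, `on_touch_moved`, `process_input`, `run_frame`) is made up for illustration, and the simulation and rendering steps are left as comments.

```python
from collections import deque

# Hypothetical names throughout; this only models the ordering described above.
pending_touches = deque()   # filled by OS callbacks, outside the main loop
square_center = (0.0, 0.0)  # simulation state: where the square gets drawn


def on_touch_moved(x, y):
    """Stand-in for a touch callback from the OS: just queue the event."""
    pending_touches.append((x, y))


def process_input():
    """Drain every event queued since the last frame; the newest position wins."""
    global square_center
    while pending_touches:
        square_center = pending_touches.popleft()


def run_frame():
    """One main loop iteration: input is applied before anything is drawn."""
    process_input()
    # update_simulation(), render_world() and present_render_buffer()
    # would follow here, all using the state that process_input() just set.
    return square_center


# Two events arrive between frames; the next frame uses the latest one.
on_touch_moved(10.0, 20.0)
on_touch_moved(12.0, 24.0)
frame_result = run_frame()
```

With this structure, an event waits at most one frame in the queue before it affects what gets rendered.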

The loop runs at 60Hz, so the lag here is at most 16.7 ms (if you’re running at 30Hz, then you’re looking at a delay of up to 33.3 ms). Unfortunately, the lag I was seeing in the game was way more than one frame, so there had to be something else.

Rendering

For some reason, I thought that iDevices were triple buffered. I ran some tests and fortunately it looks like it’s regular double buffering. That means that if I render a frame and call presentRenderBuffer(), the results of that render will be visible on screen at the next vertical sync interval. I’m sure there’s a slight lag with the iPad screen, but I’m willing to bet it’s close to negligible when we’re talking about milliseconds, so we’ll call that zero.

Main Loop Calls

The game uses CADisplayLink with an interval of 1, so the main loop is called once every 16.7 ms (give or take a fraction of a ms). I thought that perhaps CADisplayLink wasn’t playing well with touch events, so I tried switching to NSTimer, and even to my old thread-driven main loop, but none of it seemed to make any difference. Lag was alive and well as always.

The simulation and rendering in the game are very fast, probably just a few ms. That means the rest of the system has plenty of time to process events. If I had a full main loop, maybe one of the two other approaches would have made a difference.

It looks like the lag source had to be further upstream.

Input Processing

On the dashboard, press and hold on an icon, now move it around the screen. That’s the same kind of lag we have in the sample program! That’s not encouraging.

A touch screen works as a big matrix of touch sensors. The OS processes that input grid and tries to make sense of it by figuring out where the touches are, and eventually sends our programs the familiar touchesBegan, touchesMoved, etc. events. That’s no easy task by any means. It’s certainly not like processing mouse input, which is discrete and very clearly defined. For example, you can put your whole palm down on the screen. Where are the touches exactly?

TouchesBegan is actually a reasonably easy one. That’s why you see very little lag associated with that one. Sensors go from having no touch values, to going over a certain threshold. I’m sure that as soon as one or two of them cross that threshold, the OS identifies that as a touch and sends up the began event.

TouchesMoved is a lot more problematic. What constitutes a touch moving? You need to detect the area in the sensor grid that is activated, and you need to detect a pattern of movement and find out a new center for it. In order to do that, you’ll need several samples and a fair amount of CPU cycles to perform some kind of signal processing on the inputs. That extra CPU usage is probably the reason why some games get choppier as soon as you touch the screen.
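As a purely illustrative sketch of the “find a new center” step, the Python below computes a weighted centroid over a small grid of sensor readings and compares two consecutive samples. This is a guess at the general idea, not how iOS actually does it; the grid layout, the threshold, and the `touch_centroid` function are all assumptions made up for the example.

```python
def touch_centroid(grid, threshold):
    """Weighted centroid (row, col) of cells at or above threshold.

    Returns None when no cell is active. Hypothetical helper, not an iOS API.
    """
    total = 0.0
    row_sum = 0.0
    col_sum = 0.0
    for r, row in enumerate(grid):
        for c, value in enumerate(row):
            if value >= threshold:
                total += value
                row_sum += r * value
                col_sum += c * value
    if total == 0.0:
        return None
    return (row_sum / total, col_sum / total)


# Two made-up sensor samples: the activated patch shifts one column right.
sample_a = [
    [0, 0, 0, 0],
    [0, 4, 4, 0],
    [0, 4, 4, 0],
    [0, 0, 0, 0],
]
sample_b = [
    [0, 0, 0, 0],
    [0, 0, 4, 4],
    [0, 0, 4, 4],
    [0, 0, 0, 0],
]

before = touch_centroid(sample_a, threshold=2)
after = touch_centroid(sample_b, threshold=2)
movement = (after[0] - before[0], after[1] - before[1])
```

Even in this toy version, a move cannot be reported until at least two samples have been compared, which hints at why move events are harder to deliver quickly than a simple threshold crossing.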

Measuring Lag

Measuring lag in a game is tricky. You usually can’t measure it from within the code, so you need to resort to external means like Mick did in his tests.

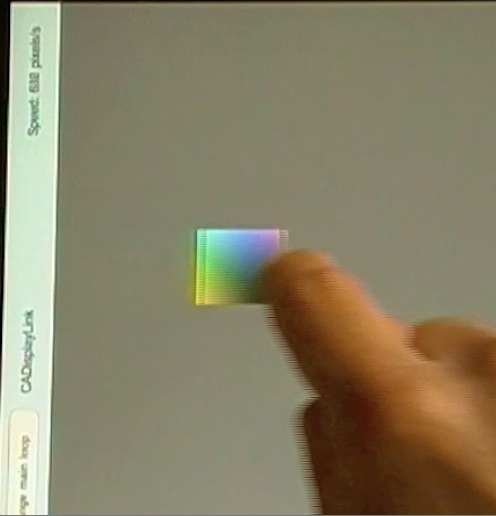

I decided to do something similar. I pulled out my digital video camera, and started recording my finger moving on the screen. The quality leaves much to be desired, but it’s good enough for the job. I can see how far my finger gets from the center of the square, but that’s not enough information to quantify the lag. How fast is my finger moving exactly? Fortunately, that’s something I can answer in code, so I added that information to the screen [1]. Now, for a given frame, I can see both how far the finger is from the center of the square and how fast it’s going.

The square is 100 pixels wide, so its edge is 50 pixels from its center. When I move my finger at about 500 pixels per second, the center of my finger is on the edge of the square. That makes a rough 100 ms total delay (50 pixels at 500 pixels per second) from input until it’s rendered. That’s a whopping 6 full frames at 60 fps!
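The estimate is just distance divided by speed. Here is the same arithmetic as a small Python sketch, using the numbers from the video; the function and constant names are made up for the example.

```python
def estimate_lag_ms(offset_px, speed_px_per_s):
    """Lag implied by how far the drawn object trails a moving finger."""
    return offset_px * 1000.0 / speed_px_per_s


SQUARE_WIDTH_PX = 100.0
FINGER_SPEED_PX_PER_S = 500.0
FRAME_RATE_HZ = 60.0

# The finger is on the edge of the square, so it leads the center by half the width.
offset_px = SQUARE_WIDTH_PX / 2.0
lag_ms = estimate_lag_ms(offset_px, FINGER_SPEED_PX_PER_S)
lag_frames = lag_ms * FRAME_RATE_HZ / 1000.0

print(f"lag is about {lag_ms:.0f} ms, or {lag_frames:.0f} frames at 60 fps")
```

Both inputs are eyeballed from video frames, so the result is a rough figure rather than a precise measurement.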

What Can We Do About It

As iOS developers, there isn’t much we can do. Make sure your loops are set up correctly to avoid an extra frame delay. Make sure you provide feedback as soon as you can and don’t delay it any longer than you have to. Other than that, there’s nothing much we can do.

I’ve been saying this for a while, but I’m a big fan of layers as long as you can get to the underlying layers when you need to. Here’s a perfect case where it would be fantastic if Apple gave us raw access to the touch matrix input. Apart from being able to process the input faster (because I know what kind of input to expect for the game), can you imagine the possibilities that would open up? Input wouldn’t be limited to touch events, and we could even sense how “hard” the user is pushing, or the shape of the push.

At the very least, it would be very useful if we had the option to allocate extra CPU cycles to input recognition. I’m not doing much in my game while this is going on, so I’d happily give the input recognition code 95% of the frame time if it means it can give me those events in half the time.

I’m hoping that in the not too distant future, iDevices come with multiple cores, and maybe one of those cores is dedicated to the OS and to doing input recognition without affecting the game. Or maybe, since that’s such a specialized, data-intensive task, some custom hardware could do the job much faster.

Until then, we’ll just have to deal with massive input lag.

How about you? Do you have some technique that can reduce the touch event lag?

LagTest source code. Released under the MIT License, yadda, yadda, yadda…

[1] I actually shrank the OpenGL view to make sure the label wasn’t on top of it because I was getting choppier input than usual. Even moving it there caused some choppiness. This is exactly what I saw last year with OpenGL performance dropping when a label is updated every frame!

This post is part of iDevBlogADay, a group of indie iPhone development blogs featuring two posts per day. You can keep up with iDevBlogADay through the web site, RSS feed, or Twitter.

New blog post: Lag: The Bane Of Touch Screens http://gamesfromwithin.com/lag-the-bane-… #idevblogaday

@SnappyTouch You care about touch lag?? Just wait till you get ‘in touch’ with broken touch screens of Android devices 🙂

@SnappyTouch excellent post! Very interesting, and unhappy 🙁 Maybe predictive moving of the object based on time+dist between touch evts?

@SnappyTouch Interesting post, I wonder if http://translate.google.co.uk/translate?… found the touch input data in RAM? It must be there somewhere!

@SnappyTouch so you could say that you would prefer a… *snappier* touch?

@SnappyTouch I still think ur wrong. I think this is an intentional behavior of the touch events. Several things don’t match a “lag” theory

@SnappyTouch like: move a springboard icon slowly(!) around in a circle. It shows the “lag” much more visibly than necessary

@SnappyTouch I actually think it is a beautiful physicality that is abstracted with this: the rubberband. on purpose. it feels right, no?

@MarkusN I still think it’s not on purpose. Nobody wants lag if you can have direct control over object. Much more physical.

@MarkusN Moving icon slowly, you still see about 1/10th sec delay I think. It’ll take a lot of beer to convince me 🙂

@SnappyTouch ok, I will challenge you with this one: If it was a lag and you’d move your finger in a straight line, stop and reverse…

@SnappyTouch scratch my last attempt. I will show you tonight. and try the beer thing 🙂

@MarkusN Sounds good 🙂

@SnappyTouch the reason there are no complaints? that drag method feels natural in 99% of all games. touchesended makes sure you arrive ok

@SnappyTouch and I will so be quiet now. will make you drunk tonight until you believe 😀

@SnappyTouch don’t use the x,y of a moving contact directly, instead sample touch velocity between two move events and use dead reckoning

@SnappyTouch then tween to touch removed x, y. I have had good results with this technique

I like your method of measuring the drag-lag. I think this is one thing it would be great for Apple to improve in later products, as it would greatly improve the user experience for painting and sketching apps.

Rather interestingly, the iPhone keyboard seems to have a lag that is one or two frames less than the rest of the UI (testing on an iPhone 4). Using the frame counting method, it seems like the keyboard delay (i.e. the delay between touching a key and the key starting to expand) is about 4/60ths of a second and the normal UI elements are about 6/60ths. So it’s quite possible that Apple is introducing an extra unnecessary frame or two of lag in the OS, which might also be found in the OpenGL loop, which kind of sits on top of the OS.

Very nice post. Actually, I remember that John Carmack mentioned in the readme that accompanied Wolf3d for iPhone that Apple should give us access to the lower-level touch handling system to avoid all this overhead…

Having coded a “driver” for the Koala Pad for the Apple II, Atari and C64 back in the 80’s… my theory is that the lag is actually averaging of noisy data from the touch screen hardware.

Do you sleep at all as part of your main loop? Try adding sleep(1 millisecond) there. In one particular case we solved one nasty touch-drag-lagginess issue just by doing that, although that case was worse than you describe.

I played around with this myself a few months ago and I think it’s actually the input smoothing Apple is doing, rather than lag, that causes this. One good indicator that this is the case is that if you remove your finger from the display, the touchEnd event is sent instantly rather than being delayed X frames. Also, moving your finger swiftly and then stopping doesn’t result in move events that replicate that; instead you get move events that gradually come to rest.
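For illustration only, here is what plain averaging of recent samples does to a finger that moves steadily and then stops. This Python sketch assumes a simple moving average over the last few positions. Nothing here is known about the filter Apple actually uses, and `smooth_positions` and the window size are made up for the example.

```python
from collections import deque


def smooth_positions(raw_positions, window):
    """Moving average over the last `window` samples (hypothetical filter)."""
    recent = deque(maxlen=window)
    smoothed = []
    for position in raw_positions:
        recent.append(position)
        smoothed.append(sum(recent) / len(recent))
    return smoothed


# The finger moves 10 px per sample, then stops dead at 40 px.
raw = [0.0, 10.0, 20.0, 30.0, 40.0, 40.0, 40.0, 40.0]
smoothed = smooth_positions(raw, window=4)

for r, s in zip(raw, smoothed):
    print(f"raw {r:5.1f}   smoothed {s:5.1f}   behind by {r - s:4.1f}")
```

While the finger is moving, the smoothed position trails it by a constant distance, which looks just like lag. After the finger stops, the smoothed position keeps creeping forward for a few more samples before it comes to rest, which matches the behavior described in this comment.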

Great article Noel! And thank you for the tips! 🙂

Lag has actually existed on touchscreen mobile devices since the WinCE days, and I bet Apple’s touchscreen, being multitouch, adds to the delay. Were you able to try your measurement app with multitouch?

At any rate, lags are probably there to keep us all “creative” and “innovative” with what we do. 🙂

In MotionInk I use touch to specify the amount of ink that is flowing from the brush. I simulate pressure with small changes in the distance of touch move points from the touch begin point. I was processing the input at the framerate of the update loop. Bad ju ju! In the end I removed the touch processing from the loop, let it run at the touch move refresh rate, and had the paint respond to that within the loop. It would be outstanding if Apple would allow drill down to the raw touch data! I could then more accurately simulate pressure.

I’ve been experimenting with using the touch velocity rather than position too, like samuraidan mentioned above. I’m having to limit how much extrapolation to use and also to extrapolate less if it looks like the finger is slowing down, to prevent too much overshoot when the finger stops. With this I can keep a box much closer to the finger, even a really fast one. The motion is a bit jerky, but it works, and for our game prototype it’s actually an invisible collider that needs to track a fast-moving finger, so if I tweak the object mechanics right I think it can work. This will make or break the game though (so obviously it’s the first thing to prototype).
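A rough Python sketch of that kind of velocity-based prediction follows. It is one possible reading of the idea, not the commenters’ code: `predict_touch`, the 0.1 second lead time (taken from the lag measured in the post), the 40 pixel cap, and the 50Hz event spacing are all assumptions chosen to keep the example simple.

```python
import math


def predict_touch(prev_pos, curr_pos, dt, lead_time, max_lead_px, prev_speed):
    """Extrapolate a touch ahead of its last reported position.

    Returns (predicted_position, current_speed). Hypothetical helper.
    """
    vx = (curr_pos[0] - prev_pos[0]) / dt
    vy = (curr_pos[1] - prev_pos[1]) / dt
    speed = math.hypot(vx, vy)
    if speed == 0.0:
        return curr_pos, speed          # finger is still: no extrapolation

    lead = speed * lead_time            # distance covered during the lag
    if speed < prev_speed:
        lead *= speed / prev_speed      # slowing down: extrapolate less
    lead = min(lead, max_lead_px)       # cap the overshoot

    direction = (vx / speed, vy / speed)
    predicted = (curr_pos[0] + direction[0] * lead,
                 curr_pos[1] + direction[1] * lead)
    return predicted, speed


DT = 0.02         # assumed time between two move events, in seconds
LEAD_TIME = 0.1   # roughly the lag measured in the post
MAX_LEAD = 40.0   # assumed cap on extrapolation, in pixels

# Steady 500 px/s drag: the 50 px lead is capped at 40 px.
steady, steady_speed = predict_touch(
    (100.0, 100.0), (110.0, 100.0), DT, LEAD_TIME, MAX_LEAD, prev_speed=500.0)

# Finger slows to 100 px/s: the lead shrinks well below speed * lead_time.
slowing, slowing_speed = predict_touch(
    (110.0, 100.0), (112.0, 100.0), DT, LEAD_TIME, MAX_LEAD, prev_speed=steady_speed)

# Finger stops: the prediction collapses back onto the touch position.
stopped, stopped_speed = predict_touch(
    (112.0, 100.0), (112.0, 100.0), DT, LEAD_TIME, MAX_LEAD, prev_speed=slowing_speed)
```

The cap and the slowdown scaling both trade accuracy during fast motion for less overshoot when the finger stops, so they would need tuning per game.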

There’s an artificial delay between -touchesBegan:withEvent: and -touchesMoved:withEvent: of approx. 250 msec.

The reason is that iOS tries to wait if the user drags his finger far enough to consider it a “move”. However, in my experience, this has a big negative impact on the user experience because in many cases it’s just ridiculous to wait. More often than not waiting to see if it’s a move is nonsense. In older iOS versions you could feel the huge difference it makes by tapping a portion of the screen and then starting to drag something. When you touched a portion of the screen before beginning to drag with another finger, the delay was disabled. It blew my mind how fast and smooth the UI responded to the dragging intent. Unfortunately – as of iOS 5.1 – there is no easy way to disable this delay.

Thank you for the article.

Same problem here. Even on current devices (an iPad 4 in my case) the problem has not been solved yet. It’s very frustrating to see the lag, but all we can do is minimize it.