Picture this: Toward the end of the development cycle, your game crawls, but you don’t see any obvious hotspots in the profiler. The culprit? Random memory access patterns and constant cache misses. In an attempt to improve performance, you try to parallelize parts of the code, but it takes heroic efforts, and, in the end, you barely get much of a speed-up due to all the synchronization you had to add. To top it off, the code is so complex that fixing bugs creates more problems, and the thought of adding new features is discarded right away. Sound familiar?

That scenario pretty accurately describes almost every game I’ve been involved with for the last 10 years. The reasons aren’t the programming languages we’re using, nor the development tools, nor even a lack of discipline. In my experience, it’s object- oriented programming (OOP) and the culture that surrounds it that is in large part to blame for those problems. OOP could be hindering your project rather than helping it!

It’s All About Data

OOP is so ingrained in the current game development culture that it’s hard to think beyond objects when thinking about a game. After all, we’ve been creating classes representing vehicles, players, and state machines for many years. What are the alternatives? Procedural programming? Functional languages? Exotic programming languages?

Data-oriented design is a different way to approach program design that addresses all these problems. Procedural programming focuses on procedure calls as its main element, and OOP deals primarily with objects. Notice that the main focus of both approaches is code: plain procedures (or functions) in one case, and grouped code associated with some internal state in the other. Data-oriented design shifts the perspective of programming from objects to the data itself: The type of the data, how it is laid out in memory, and how it will be read and processed in the game.

Programming, by definition, is about transforming data: It’s the act of creating a sequence of machine instructions describing how to process the input data and create some specific output data. A game is nothing more than a program that works at interactive rates, so wouldn’t it make sense for us to concentrate primarily on that data instead of on the code that manipulates it?

I’d like to clear up potential confusion and stress that data-oriented design does not imply that something is data- driven. A data-driven game is usually a game that exposes a large amount of functionality outside of code and lets the data determine the behavior of the game. That is an orthogonal concept to data-oriented design, and can be used with any type of programming approach.

Ideal Data

If we look at a program from the data point of view, what does the ideal data look like? It depends on the data and how it’s used. In general, the ideal data is in a format that we can use with the least amount of effort. In the best case, the format will be the same we expect as an output, so the processing is limited to just copying that data. Very often, our ideal data layout will be large blocks of contiguous, homogeneous data that we can process sequentially. In any case, the goal is to minimize the amount of transformations, and whenever possible, you should bake your data into this ideal format offline, during your asset-building process.

Because data-oriented design puts data first and foremost, we can architect our whole program around the ideal data format. We won’t always be able to make it exactly ideal (the same way that code is hardly ever by-the-book OOP), but it’s the primary goal to keep in mind. Once we achieve that, most of the problems I mentioned at the beginning of the column tend to melt away (more about that in the next section).

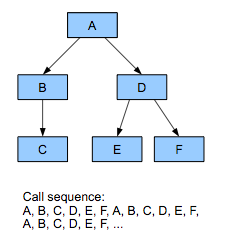

When we think about objects, we immediately think of trees— inheritance trees, containment trees, or message-passing trees, and our data is naturally arranged that way. As a result, when we perform an operation on an object, it will usually result in that object in turn accessing other objects further down in the tree. Iterating over a set of objects performing the same operation generates cascading, totally different operations at each object (see Figure 1a).

To achieve the best possible data layout, it’s helpful to break down each object into the different components, and group components of the same type together in memory, regardless of what object they came from. This organization results in large blocks of homogeneous data, which allow us to process the data sequentially (see Figure 1b). A key reason why data-oriented design is so powerful is because it works very well on large groups of objects. OOP, by definition, works on a single object. Step back for a minute and think of the last game you worked on: How many places in the code did you have only one of something? One enemy? One vehicle? One pathfinding node? One bullet? One particle? Never! Where there’s one, there are many. OOP ignores that and deals with each object in isolation. Instead, we can make things easy for us and for the hardware and organize our data to deal with the common case of having many items of the same type.

Does this sound like a strange approach? Guess what? You’re probably already doing this in some parts of your code: The particle system! Data-oriented design is turning our whole codebase into a gigantic particle system. Perhaps a name for this approach that would be more familiar to game programmers would have been particle-driven programming.

Advantages of Data-Oriented Design

hinking about data first and architecting the program based on that brings along lots of advantages.

Parallelization.

These days, there’s no way around the fact that we need to deal with multiple cores. Anyone who has tried taking some OOP code and parallelizing it can attest how difficult, error prone, and possibly not very efficient that is. Often you end up adding lots of synchronization primitives to prevent concurrent access to data from multiple threads, and usually a lot of the threads end up idling for quite a while waiting for other threads to complete. As a result, the performance improvement can be quite underwhelming.

When we apply data-oriented design, parallelization becomes a lot simpler: We have the input data, a small function to process it, and some output data. We can easily take something like that and split it among multiple threads with minimal synchronization between them. We can even take it further and run that code on processors with local memory (like the SPUs on the Cell processor) without having to do anything differently.

Cache utilization.

In addition to using multiple cores, one of the keys to achieving great performance in modern hardware, with its deep instruction pipelines and slow memory systems with multiple levels of caches, is having cache-friendly memory access. Data-oriented design results in very efficient use of the instruction cache because the same code is executed over and over. Also, if we lay out the data in large, contiguous blocks, we can process the data sequentially, getting nearly perfect data cache usage and great performance. Possible optimizations. When we think of objects or functions, we tend to get stuck optimizing at the function or even the algorithm level; Reordering some function calls, changing the sort method, or even re-writing some C code with assembly.

That kind of optimization is certainly beneficial, but by thinking about the data first we can step further back and make larger, more important optimizations. Remember that all a game does is transform some data (assets, inputs, state) into some other data (graphics commands, new game states). By keeping in mind that flow of data, we can make higher-level, more intelligent decisions based on how the data is transformed, and how it is used. That kind of optimization can be extremely difficult and time- consuming to implement with more traditional OOP methods.

Modularity.

So far, all the advantages of data-oriented design have been based around performance: cache utilization, optimizations, and parallelization. There is no doubt that as game programmers, performance is an extremely important goal for us. There is often a conflict between techniques that improve performance and techniques that help readability and ease of development. For example, re-writing some code in assembly language can result in a performance boost, but usually makes the code harder to read and maintain.

Fortunately, data-oriented design is beneficial to both performance and ease of development. When you write code specifically to transform data, you end up with small functions, with very few dependencies on other parts of the code. The codebase ends up being very “flat,†with lots of leaf functions without many dependencies. This level of modularity and lack of dependences makes understanding, replacing, and updating the code much easier.

Testing.

The last major advantage of data-oriented design is ease of testing. As we saw in the June and August Inner Product columns, writing unit tests to check object interactions is not trivial. You need to set up mocks and test things indirectly. Frankly, it’s a bit of a pain. On the other hand, when dealing directly with data, it couldn’t be easier to write unit tests: Create some input data, call the transform function, and check that the output data is what we expect. There’s nothing else to it. This is actually a huge advantage and makes code extremely easy to test, whether you’re doing test-driven development or just writing unit tests after the code.

Drawbacks of Data-Oriented Design

Data-oriented design is not the silver bullet to all the problems in game development. It does help tremendously writing high-performance code and making programs more readable and easier to maintain, but it does come with a few drawbacks of its own.

The main problem with data-oriented design is that it’s different from what most programmers are used to or learned in school. It requires turning our mental model of the program ninety degrees and changing how we think about it. It takes some practice before it becomes second-nature.

Also, because it’s a different approach, it can be challenging to interface with existing code, written in a more OOP or procedural way. It’s hard to write a single function in isolation, but as long as you can apply data-oriented design to a whole subsystem you should be able to reap a lot of the benefits.

Applying Data-Oriented Design

Enough of the theory and overview. How do you actually get started with data-oriented design? To start with, just pick a specific area in your code: navigation, animations, collisions, or something else. Later on, when most of your game engine is centered around the data, you can worry about data flow all the way from the start of a frame until the end.

The next step is to clearly identify the data inputs required by the system, and what kind of data it needs to generate. It’s OK to think about it in OOP terms for now, just to help us identify the data. For example, in an animation system, some of the input data is skeletons, base poses, animation data, and current state. The result is not “the code plays animations,†but the data generated by the animations that are currently playing. In this case, our outputs would be a new set of poses and an updated state.

It’s important to take a step further and classify the input data based on how it is used. Is it read- only, read-write, or write-only? That classification will help guide design decisions about where to store it, and when to process it depending on dependencies with other parts of the program.

At this point, stop thinking of the data required for a single operation, and think in terms of applying it to dozens or hundreds of entries. We no longer have one skeleton, one base pose, and a current state, and instead we have a block of each of those types with many instances in each of the blocks.

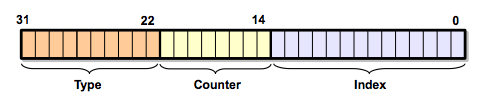

Think very carefully how the data is used during the transformation process from input to output. You might realize that you need to scan a particular field in a structure to perform a pass on the data, and then you need to use the results to do another pass. In that case, it might make more sense to split that initial field into a separate block of memory that can be processed independently, allowing for better cache utilization and potential parallelization. Or maybe you need to vectorize some part of the code, which requires fetching data from different locations to put it in the same vector register. In that case, that data can be stored contiguously so vector operations can be applied directly, without any extra transformations.

Now you should have a very good understanding of your data. Writing the code to transform it is going to be much simpler. It’s like writing code by filling in the blanks. You’ll even be pleasantly surprised to realize that the code is much simpler and smaller than you thought in the first place, compared to what the equivalent OOP code would have been.

If you think back about most of the topics we’ve covered in this column over the last year, you’ll see that they were all leading toward this type of design. Now it’s the time to be careful about how the data is aligned (Dec 2008 and Jan 2009), to bake data directly into an input format that you can use efficiently (Oct and Nov 2008), or to use non- pointer references between data blocks so they can be easily relocated (Sept 2009).

Is Thre Room For OOP?

Does this mean that OOP is useless and you should never apply it in your programs? I’m not quite ready to say that. Thinking in terms of objects is not detrimental when there is only one of each object (a graphics device, a log manager, etc) although in that case you might as well write it with simpler C-style functions and file-level static data. Even in that situation, it’s still important that those objects are designed around transforming data.

Another situation where I still find myself using OOP is GUI systems. Maybe it’s because you’re working with a system that is already designed in an object-oriented way, or maybe it’s because performance and complexity are not crucial factors with GUI code. In any case, I much prefer GUI APIs that are light on inheritance and use containment as much as possible (Cocoa and CocoaTouch are good examples of this). It’s very possible that a data-oriented GUI system could be written for games that would be a pleasure to work with, but I haven’t seen one yet.

Finally, there’s nothing stopping you from still having a mental picture of objects if that’s the way you like to think about the game. It’s just that the enemy entity won’t be all in the same physical location in memory. Instead, it will be split up into smaller subcomponents, each one forming part of a larger data table of similar components.

Data-oriented design is a bit of a departure from traditional programming approaches, but by always thinking about the data and how it needs to be transformed, you’ll be able to reap huge benefits both in terms of performance and ease of development.

Thanks to Mike Acton and Jim Tilander for challenging my ideas over the years and for their feedback on this article.

Picture this: Toward the end of the development cycle, your game crawls, but you don’t see any obvious hotspots in the profiler. The culprit? Random memory access patterns and constant cache misses. In an attempt to improve performance, you try to parallelize parts of the code, but it takes heroic efforts, and, in the end, you barely get much of a speed-up due to all the synchronization you had to add. To top it off, the code is so complex that fixing bugs creates more problems, and the thought of adding new features is discarded right away. Sound familiar?

That scenario pretty accurately describes almost every game I’ve been involved with for the last 10 years. The reasons aren’t the programming languages we’re using, nor the development tools, nor even a lack of discipline. In my experience, it’s object- oriented programming (OOP) and the culture that surrounds it that is in large part to blame for those problems. OOP could be hindering your project rather than helping it!

It’s All About Data

OOP is so ingrained in the current game development culture that it’s hard to think beyond objects when thinking about a game. After all, we’ve been creating classes representing vehicles, players, and state machines for many years. What are the alternatives? Procedural programming? Functional languages? Exotic programming languages?

Data-oriented design is a different way to approach program design that addresses all these problems. Procedural programming focuses on procedure calls as its main element, and OOP deals primarily with objects. Notice that the main focus of both approaches is code: plain procedures (or functions) in one case, and grouped code associated with some internal state in the other. Data-oriented design shifts the perspective of programming from objects to the data itself: The type of the data, how it is laid out in memory, and how it will be read and processed in the game.

Programming, by definition, is about transforming data: It’s the act of creating a sequence of machine instructions describing how to process the input data and create some specific output data. A game is nothing more than a program that works at interactive rates, so wouldn’t it make sense for us to concentrate primarily on that data instead of on the code that manipulates it?

I’d like to clear up potential confusion and stress that data-oriented design does not imply that something is data- driven. A data-driven game is usually a game that exposes a large amount of functionality outside of code and lets the data determine the behavior of the game. That is an orthogonal concept to data-oriented design, and can be used with any type of programming approach.

Ideal Data

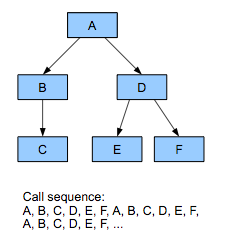

Figure 1a. Call sequence with an object-oriented approach

If we look at a program from the data point of view, what does the ideal data look like? It depends on the data and how it’s used. In general, the ideal data is in a format that we can use with the least amount of effort. In the best case, the format will be the same we expect as an output, so the processing is limited to just copying that data. Very often, our ideal data layout will be large blocks of contiguous, homogeneous data that we can process sequentially. In any case, the goal is to minimize the amount of transformations, and whenever possible, you should bake your data into this ideal format offline, during your asset-building process.

Because data-oriented design puts data first and foremost, we can architect our whole program around the ideal data format. We won’t always be able to make it exactly ideal (the same way that code is hardly ever by-the-book OOP), but it’s the primary goal to keep in mind. Once we achieve that, most of the problems I mentioned at the beginning of the column tend to melt away (more about that in the next section).

When we think about objects, we immediately think of trees— inheritance trees, containment trees, or message-passing trees, and our data is naturally arranged that way. As a result, when we perform an operation on an object, it will usually result in that object in turn accessing other objects further down in the tree. Iterating over a set of objects performing the same operation generates cascading, totally different operations at each object (see Figure 1a).

Figure 1b. Call sequence with a data-oriented approach

To achieve the best possible data layout, it’s helpful to break down each object into the different components, and group components of the same type together in memory, regardless of what object they came from. This organization results in large blocks of homogeneous data, which allow us to process the data sequentially (see Figure 1b). A key reason why data-oriented design is so powerful is because it works very well on large groups of objects. OOP, by definition, works on a single object. Step back for a minute and think of the last game you worked on: How many places in the code did you have only one of something? One enemy? One vehicle? One pathfinding node? One bullet? One particle? Never! Where there’s one, there are many. OOP ignores that and deals with each object in isolation. Instead, we can make things easy for us and for the hardware and organize our data to deal with the common case of having many items of the same type.

Does this sound like a strange approach? Guess what? You’re probably already doing this in some parts of your code: The particle system! Data-oriented design is turning our whole codebase into a gigantic particle system. Perhaps a name for this approach that would be more familiar to game programmers would have been particle-driven programming.

Advantages of Data-Oriented Design

Thinking about data first and architecting the program based on that brings along lots of advantages.

Parallelization.

These days, there’s no way around the fact that we need to deal with multiple cores. Anyone who has tried taking some OOP code and parallelizing it can attest how difficult, error prone, and possibly not very efficient that is. Often you end up adding lots of synchronization primitives to prevent concurrent access to data from multiple threads, and usually a lot of the threads end up idling for quite a while waiting for other threads to complete. As a result, the performance improvement can be quite underwhelming.

When we apply data-oriented design, parallelization becomes a lot simpler: We have the input data, a small function to process it, and some output data. We can easily take something like that and split it among multiple threads with minimal synchronization between them. We can even take it further and run that code on processors with local memory (like the SPUs on the Cell processor) without having to do anything differently.

Cache utilization.

In addition to using multiple cores, one of the keys to achieving great performance in modern hardware, with its deep instruction pipelines and slow memory systems with multiple levels of caches, is having cache-friendly memory access. Data-oriented design results in very efficient use of the instruction cache because the same code is executed over and over. Also, if we lay out the data in large, contiguous blocks, we can process the data sequentially, getting nearly perfect data cache usage and great performance. Possible optimizations. When we think of objects or functions, we tend to get stuck optimizing at the function or even the algorithm level; Reordering some function calls, changing the sort method, or even re-writing some C code with assembly.

That kind of optimization is certainly beneficial, but by thinking about the data first we can step further back and make larger, more important optimizations. Remember that all a game does is transform some data (assets, inputs, state) into some other data (graphics commands, new game states). By keeping in mind that flow of data, we can make higher-level, more intelligent decisions based on how the data is transformed, and how it is used. That kind of optimization can be extremely difficult and time- consuming to implement with more traditional OOP methods.

Modularity.

So far, all the advantages of data-oriented design have been based around performance: cache utilization, optimizations, and parallelization. There is no doubt that as game programmers, performance is an extremely important goal for us. There is often a conflict between techniques that improve performance and techniques that help readability and ease of development. For example, re-writing some code in assembly language can result in a performance boost, but usually makes the code harder to read and maintain.

Fortunately, data-oriented design is beneficial to both performance and ease of development. When you write code specifically to transform data, you end up with small functions, with very few dependencies on other parts of the code. The codebase ends up being very “flat,†with lots of leaf functions without many dependencies. This level of modularity and lack of dependences makes understanding, replacing, and updating the code much easier.

Testing.

The last major advantage of data-oriented design is ease of testing. As we saw in the June and August Inner Product columns, writing unit tests to check object interactions is not trivial. You need to set up mocks and test things indirectly. Frankly, it’s a bit of a pain. On the other hand, when dealing directly with data, it couldn’t be easier to write unit tests: Create some input data, call the transform function, and check that the output data is what we expect. There’s nothing else to it. This is actually a huge advantage and makes code extremely easy to test, whether you’re doing test-driven development or just writing unit tests after the code.

Drawbacks of Data-Oriented Design

Data-oriented design is not the silver bullet to all the problems in game development. It does help tremendously writing high-performance code and making programs more readable and easier to maintain, but it does come with a few drawbacks of its own.

The main problem with data-oriented design is that it’s different from what most programmers are used to or learned in school. It requires turning our mental model of the program ninety degrees and changing how we think about it. It takes some practice before it becomes second-nature.

Also, because it’s a different approach, it can be challenging to interface with existing code, written in a more OOP or procedural way. It’s hard to write a single function in isolation, but as long as you can apply data-oriented design to a whole subsystem you should be able to reap a lot of the benefits.

Applying Data-Oriented Design

Enough of the theory and overview. How do you actually get started with data-oriented design? To start with, just pick a specific area in your code: navigation, animations, collisions, or something else. Later on, when most of your game engine is centered around the data, you can worry about data flow all the way from the start of a frame until the end.

The next step is to clearly identify the data inputs required by the system, and what kind of data it needs to generate. It’s OK to think about it in OOP terms for now, just to help us identify the data. For example, in an animation system, some of the input data is skeletons, base poses, animation data, and current state. The result is not “the code plays animations,†but the data generated by the animations that are currently playing. In this case, our outputs would be a new set of poses and an updated state.

It’s important to take a step further and classify the input data based on how it is used. Is it read- only, read-write, or write-only? That classification will help guide design decisions about where to store it, and when to process it depending on dependencies with other parts of the program.

At this point, stop thinking of the data required for a single operation, and think in terms of applying it to dozens or hundreds of entries. We no longer have one skeleton, one base pose, and a current state, and instead we have a block of each of those types with many instances in each of the blocks.

Think very carefully how the data is used during the transformation process from input to output. You might realize that you need to scan a particular field in a structure to perform a pass on the data, and then you need to use the results to do another pass. In that case, it might make more sense to split that initial field into a separate block of memory that can be processed independently, allowing for better cache utilization and potential parallelization. Or maybe you need to vectorize some part of the code, which requires fetching data from different locations to put it in the same vector register. In that case, that data can be stored contiguously so vector operations can be applied directly, without any extra transformations.

Now you should have a very good understanding of your data. Writing the code to transform it is going to be much simpler. It’s like writing code by filling in the blanks. You’ll even be pleasantly surprised to realize that the code is much simpler and smaller than you thought in the first place, compared to what the equivalent OOP code would have been.

If you think back about most of the topics we’ve covered in this column over the last year, you’ll see that they were all leading toward this type of design. Now it’s the time to be careful about how the data is aligned (Dec 2008 and Jan 2009), to bake data directly into an input format that you can use efficiently (Oct and Nov 2008), or to use non- pointer references between data blocks so they can be easily relocated (Sept 2009).

Is There Room For OOP?

Does this mean that OOP is useless and you should never apply it in your programs? I’m not quite ready to say that. Thinking in terms of objects is not detrimental when there is only one of each object (a graphics device, a log manager, etc) although in that case you might as well write it with simpler C-style functions and file-level static data. Even in that situation, it’s still important that those objects are designed around transforming data.

Another situation where I still find myself using OOP is GUI systems. Maybe it’s because you’re working with a system that is already designed in an object-oriented way, or maybe it’s because performance and complexity are not crucial factors with GUI code. In any case, I much prefer GUI APIs that are light on inheritance and use containment as much as possible (Cocoa and CocoaTouch are good examples of this). It’s very possible that a data-oriented GUI system could be written for games that would be a pleasure to work with, but I haven’t seen one yet.

Finally, there’s nothing stopping you from still having a mental picture of objects if that’s the way you like to think about the game. It’s just that the enemy entity won’t be all in the same physical location in memory. Instead, it will be split up into smaller subcomponents, each one forming part of a larger data table of similar components.

Data-oriented design is a bit of a departure from traditional programming approaches, but by always thinking about the data and how it needs to be transformed, you’ll be able to reap huge benefits both in terms of performance and ease of development.

Thanks to Mike Acton and Jim Tilander for challenging my ideas over the years and for their feedback on this article.

This article was originally printed in the September 2009 issue of Game Developer.

Here’s a Korean translation of this article by Hakkyu Kim.

The basics of mocking objects are as simple as what we’ve seen. Armed with that knowledge, you can go ahead and test all the object interactions in your code. However, I bet that you’re going to get tired quickly from all that typing every time you create a new mock. The bigger and more complex the object is, the more tedious the operation becomes. That’s where a mocking framework comes in.

The basics of mocking objects are as simple as what we’ve seen. Armed with that knowledge, you can go ahead and test all the object interactions in your code. However, I bet that you’re going to get tired quickly from all that typing every time you create a new mock. The bigger and more complex the object is, the more tedious the operation becomes. That’s where a mocking framework comes in.

If you haven’t read it already on Game Developer Magazine, don’t miss the

If you haven’t read it already on Game Developer Magazine, don’t miss the