They say good things come in small packages. That was certainly true of this year’s Game Tech Seminars. It was a four-day intensive conference on very specific topics (realistic characters and engine/tools technology) with a very impressive list of speakers. The best part, though, was the attendees. There were only about 80 people at the conference (due in no small part to the hefty price tag, I’m sure), and most of them were tech leads or directors of technology at their companies. It was great to see lots of familiar faces, but also many new ones I had only known through the Internet, or not at all. The discussions after each session, and even over lunch or dinner, were worth the price of admission alone.

I’ve been saying for a while that the “next frontier” of game technology is going to be characters. Not fancy graphics. Not physics. Not online play. We can now create worlds with exquisite lighting, fancy details, and complex environments, and we can do it in real time. Sometimes you start to wonder if we’re really approaching movie-level quality. Then a character walks in and that illusion is instantly destroyed: the feet slide as the character slows down, the animation pops as it transitions between poses, the arm interpenetrates the table when he leans on it, the skin looks more like paper, and the hair looks like it has been plastered down with super glue. In other words, the character has less charm than a zombie straight out of a B horror movie.

It is no surprise, then, that the first two days of the GameTech seminars were dedicated to characters. The sessions approached the problem from two different angles: first, how to make characters look better; then, how to make them move more realistically.

It started by covering some of the latest advances in hair and skin rendering from the Siggraph and special effects crowd. When we’re talking about physically rendering and simulating each individual hair as a chain of 10 or more jointed segments, you know we’re way beyond what’s currently possible in games. Still, it’s a glimpse of where we’ll be a few years down the pipe. In the meantime, understanding the full simulation in detail might help us come up with shortcuts and tricks that look somewhat like the real thing.
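
To make the “jointed segments” idea concrete, here is a toy single strand. It is a minimal sketch, assuming Verlet integration with iterative segment-length constraints (a common real-time rope and cloth trick, not necessarily what the presenters used), a root pinned to the scalp, gravity as the only force, and simple velocity damping. The function and parameter names are hypothetical.

```python
import math


def simulate_strand(root, num_segments=10, seg_len=0.01,
                    gravity=(0.0, -9.8, 0.0), dt=1.0 / 60.0,
                    steps=60, iterations=8, damping=0.98):
    """Toy hair strand: point masses joined by fixed-length links.

    Hypothetical sketch: Verlet integration plus iterative relaxation of
    the link-length constraints. Particle 0 stays pinned to `root`.
    """
    # Start the strand sticking straight out along +x from the root.
    pos = [[root[0] + i * seg_len, root[1], root[2]]
           for i in range(num_segments + 1)]
    prev = [p[:] for p in pos]

    for _ in range(steps):
        # Verlet step for every free particle; velocity is implicit in
        # (current - previous) and is scaled by `damping` to bleed energy.
        for i in range(1, len(pos)):
            for k in range(3):
                cur = pos[i][k]
                pos[i][k] = cur + (cur - prev[i][k]) * damping + gravity[k] * dt * dt
                prev[i][k] = cur

        # Pull each link back toward its rest length a few times per frame.
        for _ in range(iterations):
            pos[0] = list(root)
            for i in range(num_segments):
                a, b = pos[i], pos[i + 1]
                d = [b[k] - a[k] for k in range(3)]
                dist = math.sqrt(sum(c * c for c in d)) or 1e-9
                err = (dist - seg_len) / dist
                if i == 0:
                    # The root is fixed, so the child takes the whole correction.
                    for k in range(3):
                        b[k] -= d[k] * err
                else:
                    for k in range(3):
                        a[k] += d[k] * err * 0.5
                        b[k] -= d[k] * err * 0.5
    return pos


strand = simulate_strand((0.0, 0.0, 0.0), steps=300)
```

After a few hundred damped steps the strand should settle hanging below its root. Film-quality hair adds collisions, bending and twisting stiffness, and thousands of strands, which is exactly why it is still out of reach for games.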

The skin rendering part was much more down to earth. Even though it was approached from the movie production point of view, what they’re doing is not that far from what could soon be possible in games. As a matter of fact, they are already well past the point of diminishing returns with regard to quality. Some of the subsurface scattering models presented produced only extremely subtle changes (I wouldn’t even call them improvements, although I’m sure they look closer to the reference photographs).

ATI showed off their newest Ruby demo. I was expecting more in the way of character animation, but it had some pretty fancy lighting: lighting was pre-computed and sampled at a bunch of points in the environment (along a couple of splines in the tunnel), then applied in real time to the moving characters. For skin, they simply blur the diffuse lighting to fake subsurface scattering. Interestingly, they use a shader that does a Poisson disk filter, which seems to be popping up everywhere nowadays (depth of field, shadows, subsurface scattering, and so on). A few years ago, shiny bumpy things were the in thing. Now it’s blurry diffuse effects.
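
A Poisson disk filter averages a handful of taps scattered irregularly over a disk, with no two taps closer than a minimum distance, which avoids the banding a regular grid produces with so few samples. Below is a minimal CPU sketch of that idea, assuming a grayscale diffuse-lighting buffer stored as a list of rows, nearest-pixel taps clamped at the edges (a shader would use bilinear texture fetches instead), and tap offsets generated by simple dart throwing. It is not ATI’s shader; all names are hypothetical.

```python
import math
import random


def poisson_disk_offsets(count, radius, min_dist, seed=0, max_tries=10000):
    """Dart throwing: propose random points inside a disk of `radius` and
    reject any that land closer than `min_dist` to an accepted point."""
    rng = random.Random(seed)
    pts = [(0.0, 0.0)]  # always keep a center tap
    tries = 0
    while len(pts) < count and tries < max_tries:
        tries += 1
        # sqrt() makes the proposals uniform over the disk's area.
        r = radius * math.sqrt(rng.random())
        theta = 2.0 * math.pi * rng.random()
        p = (r * math.cos(theta), r * math.sin(theta))
        if all(math.hypot(p[0] - q[0], p[1] - q[1]) >= min_dist for q in pts):
            pts.append(p)
    return pts


def poisson_blur(img, offsets):
    """Average `img` (a list of rows of floats) over the given tap offsets.

    Taps snap to the nearest pixel and are clamped at the image border.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dx, dy in offsets:
                sx = min(max(int(round(x + dx)), 0), w - 1)
                sy = min(max(int(round(y + dy)), 0), h - 1)
                total += img[sy][sx]
            out[y][x] = total / len(offsets)
    return out
```

In a real-time setting the offsets would be computed once, baked into shader constants, and the disk radius scaled per effect (blur size for depth of field, penumbra width for shadows, scattering distance for skin).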

The animation section is where things really started getting interesting. We all know that the days of pure pre-canned animation playback are numbered. So far we’ve only made attempts at correcting grab animations or planting the feet on the ground with IK. But there’s more we can do. Much more.

Lucas Kovar and Michael Gleicher presented some of their research in data-driven character motion. The results were absolutely stunning. They approached the problem of creating new animations in a variety of ways, but all of them built the new animations from captured motion data. One approach looked at a bunch of motion data and created animation trees from it with nearly seamless transitions. Anybody who has tried putting together even a simple animation tree for a third-person game would be amazed by the results (although, to be fair, their animation “trees” were really complex).
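
The core question when building such a graph from raw data is: which frame of clip A looks enough like which frame of clip B to jump between them? A rough sketch, assuming poses are lists of world-space joint positions and using a plain windowed sum of squared joint distances. The published motion-graph work also aligns the two poses with a rigid 2D transform and weights the joints, which is omitted here; the names, window, and threshold are hypothetical.

```python
def pose_distance(a, b):
    """Sum of squared distances between corresponding joints of two poses."""
    return sum((pa[0] - pb[0]) ** 2 + (pa[1] - pb[1]) ** 2 + (pa[2] - pb[2]) ** 2
               for pa, pb in zip(a, b))


def window_distance(clip_a, i, clip_b, j, window):
    """Compare `window` consecutive frames starting at i in A and j in B."""
    return sum(pose_distance(clip_a[i + k], clip_b[j + k]) for k in range(window))


def transition_candidates(clip_a, clip_b, window=5, threshold=0.1):
    """Return (i, j, cost) for every frame pair cheap enough to blend
    across, cheapest first."""
    found = []
    for i in range(len(clip_a) - window + 1):
        for j in range(len(clip_b) - window + 1):
            cost = window_distance(clip_a, i, clip_b, j, window)
            if cost < threshold:
                found.append((i, j, cost))
    found.sort(key=lambda t: t[2])
    return found
```

Each surviving pair becomes an edge in the graph, and a short blend over the window hides the seam. Comparing a window rather than a single frame matters: two poses can match while moving in opposite directions.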

The parameterized motion project was really impressive, and I think it has a lot of potential in game development. It synthesizes new animations from existing similar ones. So out of a few walking, turning, and jumping cycles, it could generate a new set of animations adapted to the current game environment. It was also demonstrated with more complex motions like martial arts moves or even cartwheels. At the heart of this approach is the idea of match webs, a data structure used to find potential matches among similar animations. The applications to offline animation authoring are obvious, but I really think it’s possible to apply it at runtime as well. They were able to show some impressive results with just a few animation data sets.
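
Once similar clips have been found and time-aligned, the runtime side can be as simple as choosing blend weights from a target parameter. A minimal sketch, assuming each example clip is tagged with a parameter vector (say, walking speed), the poses are already time-aligned, and joint angles can be blended linearly (a production system would blend rotations as quaternions). The inverse-distance weighting over the k nearest examples is our choice for illustration, not necessarily the authors’ scheme; the names are hypothetical.

```python
import math


def blend_weights(params, target, k=3):
    """Inverse-distance weights over the k examples nearest to `target`.

    `params` holds one parameter vector per example clip; the returned
    weights (one per example) sum to 1.
    """
    ranked = sorted((math.dist(p, target), i) for i, p in enumerate(params))[:k]
    weights = [0.0] * len(params)
    if ranked[0][0] == 0.0:
        # Exact hit: just play that example.
        weights[ranked[0][1]] = 1.0
        return weights
    inv = [(1.0 / d, i) for d, i in ranked]
    total = sum(w for w, _ in inv)
    for w, i in inv:
        weights[i] = w / total
    return weights


def blend_pose(poses, weights):
    """Weighted average of time-aligned poses given as flat joint-angle lists."""
    return [sum(w * pose[j] for w, pose in zip(weights, poses))
            for j in range(len(poses[0]))]


speeds = [(1.0,), (2.0,), (4.0,)]          # walk, jog, run (m/s)
weights = blend_weights(speeds, (1.5,), k=2)
```

Asking for 1.5 m/s between a 1.0 m/s and a 2.0 m/s example yields equal weights on those two and none on the run.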

The next day, David Wu approached the problem of adapting animations to the environment by solving for the physical constraints. While that might be a reasonable approach to “fix” animations or make minor tweaks, it really didn’t show the potential of the data-driven approach. The most interesting thing I got out of David’s talk was his comment that many game developers lack an understanding of what’s going on under the hood of middleware packages (especially physics), so they can’t take full advantage of their capabilities. I don’t think that’s true for graphics or sound. I wonder if that means we haven’t exposed the right interface in physics middleware packages. Or maybe physics is more complex and more tightly coupled to the rest of the game. David went on to present what he believes will be the future interface to physics (he compared it to the advances computer graphics made once we all agreed on the polygon as the basic primitive).

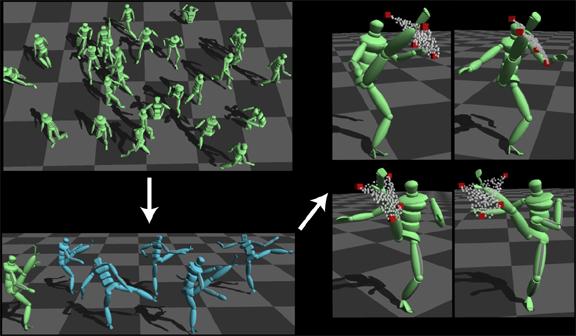

Finally, a third way of approaching animation synthesis was presented by Torsten Reil, from NaturalMotion. He tackled the problem by starting from a set of controllers and running them through genetic algorithms to evolve the motion he wanted. Unfortunately, it seemed he didn’t want to go into much detail when the audience asked questions, probably because of patent issues and the fact that NaturalMotion sells a product based on that research. Because of that, it felt a bit like a sales pitch: “Look at this cool tech. Now come buy our tool that does it all for you.” Many of the demos shown used a combination of genetic algorithms to derive the controllers, plus some artist tweaking and a few heuristics. Right now, this approach is limited to offline animation. Still, it was very impressive to see the results of the genetic evolution of bipedal motion and hear what kind of trouble they had to go through to generate them (they had to resort to a stabilizing controller, like training wheels on a bicycle, to get past some of the local maxima that were preventing further evolution in the right direction).
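
For anyone who hasn’t seen one, the evolutionary loop itself is small; the hard part is the fitness evaluation. A toy sketch, assuming controller parameters are a flat vector of floats and using a stand-in fitness (negative distance to a hidden target vector) where a real system would run a physics simulation and measure something like distance walked before falling. This is not NaturalMotion’s method; the names are hypothetical.

```python
import random


def evolve(fitness, n_params, pop_size=30, generations=50,
           mutation=0.1, seed=0):
    """Toy genetic algorithm over real-valued controller parameters.

    Keeps the best individual unchanged each generation (elitism) and
    fills the rest with mutated crossovers of tournament-selected parents.
    Returns (best_individual, best_fitness_per_generation).
    """
    rng = random.Random(seed)
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(n_params)]
           for _ in range(pop_size)]
    history = []

    def tournament():
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    for _ in range(generations):
        best = max(pop, key=fitness)
        history.append(fitness(best))
        children = [best[:]]  # elitism: the champion always survives
        while len(children) < pop_size:
            p1, p2 = tournament(), tournament()
            # Uniform crossover, then Gaussian mutation of every gene.
            child = [(x if rng.random() < 0.5 else y) + rng.gauss(0.0, mutation)
                     for x, y in zip(p1, p2)]
            children.append(child)
        pop = children
    best = max(pop, key=fitness)
    history.append(fitness(best))
    return best, history


target = [0.3, -0.5, 0.8]  # hypothetical "ideal gains"


def toy_fitness(genes):
    return -sum((g - t) ** 2 for g, t in zip(genes, target))


best, history = evolve(toy_fitness, 3)
```

Because of elitism the best fitness never gets worse from one generation to the next, but it can still stall on a local maximum, which is the kind of plateau the “training wheels” controller was used to escape.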

Of the three approaches, I’m most excited by the data-driven techniques. I really believe we can start applying some of them in games relatively soon (even in this next generation of consoles). Their big advantage is that they don’t just generate a plain walking animation. You can feed them whatever specific type of animation you want: sneaking walk, female walk, big guy walk, etc. You can then adapt those animations to your game and get the style you wanted perfectly integrated into your game.

Finally, Tom Forsyth’s talk on “How to Walk” was very down to earth, practical, and well illustrated. He started by pointing out that most games out there right now have terrible walk cycles. That’s particularly a problem for third-person games, where the player constantly sees his avatar moving around the world. It’s a topic he’s particularly well qualified to talk about, since he’s currently developing Granny. The key idea of his talk is that the animation should not drive the movement of the character in the world. The game should always move the character, and the animation system should do its best to keep up and minimize artifacts (the only exceptions being moving the character up or down a flight of stairs or over some other discontinuity, and cutscenes, when the player is not in control). He explained how to blend animations effectively (make sure they’re synced), how to make the character lean forward, backward, and to the sides when it changes direction or speed, etc. He showed all these techniques in a neat little demo that will probably be bundled with the Granny SDK.
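
The two central points, that the game owns the character’s speed and that blended cycles must stay in sync, can be sketched together. A minimal sketch, assuming exactly two looping cycles (walk and run) whose footfalls line up at the same normalized phase, and a linear blend weight chosen so the blended authored speed matches the speed the game asks for. This is not Granny’s API; the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Cycle:
    duration: float  # seconds for one full loop (left and right step)
    speed: float     # ground speed the clip was authored at, in m/s


class SyncedLocomotion:
    """Blend two looping cycles on a shared normalized phase."""

    def __init__(self, walk: Cycle, run: Cycle):
        self.walk, self.run = walk, run
        self.phase = 0.0  # in [0, 1), shared by both clips so footfalls line up

    def update(self, game_speed: float, dt: float):
        """Advance by dt at the speed the game chose.

        Returns (run_weight, walk_time, run_time): the blend weight and the
        local time at which to sample each clip.
        """
        w = (game_speed - self.walk.speed) / (self.run.speed - self.walk.speed)
        w = min(max(w, 0.0), 1.0)
        duration = (1.0 - w) * self.walk.duration + w * self.run.duration
        authored = (1.0 - w) * self.walk.speed + w * self.run.speed
        # Outside the authored range, scale playback so the feet keep up
        # with the game instead of the animation dragging the character.
        rate = game_speed / authored if authored > 0.0 else 1.0
        self.phase = (self.phase + dt * rate / duration) % 1.0
        return w, self.phase * self.walk.duration, self.phase * self.run.duration


loco = SyncedLocomotion(Cycle(duration=1.0, speed=1.5), Cycle(duration=0.6, speed=4.0))
run_weight, t_walk, t_run = loco.update(2.75, 0.1)
```

Sampling both clips at the same normalized phase is what keeps a half-walk, half-run blend from crossing a left footfall with a right one. Leaning could be layered on top in the same spirit, driven by the game’s acceleration rather than by the clips.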

That was a lot of material for just two days! Every night my brain was full and ready to explode, but it was a really great session: a great mix of current academic research, movie effects, and practical applications to games. And that wasn’t the end. For the next two days we would switch gears and cover engine and tools technology. I’ll talk about those in the next article.
